Data, death, and your data body.

On how the US government has failed all of us and why we should care.

Do you feel in control of your personal data and likeness? I doubt it.

Americans deserve better.

Your individual data and creative content should belong to you until you decide otherwise. I am not a lawyer or a privacy expert, but I am a creator, a human being, and a reasonable person, and I do know this to be true: You should be the one in control of how you share, sell, or license your content. Your government should serve the public good by helping protect you and your intellectual property. And Americans should be screaming mad about how utterly ineffectual the US government has been in this regard.

Europeans have the General Data Protection Regulation (GDPR), which generally requires businesses to have a lawful basis, most visibly a person's consent, before collecting and using their personal data.

In contrast to the relatively clear protections in Europe, the US remains stuck with a convoluted mess.

We have a mess of weak regulations: Instead of one simple privacy law that covers everything, we have a lot of different rules for different sectors, making the law harder to understand and leading to many individuals just throwing up their hands and saying, “Whatever, this is too complicated.” In effect, the complex structure of our data privacy laws, such as they are, motivates disengagement at a time when people should be very engaged in a rapidly accelerating technological reality.

We have a mess of crappy user experience: We live in a default 'opt-out' world, where businesses exploit our data until we fight back. Our inboxes are overflowing, and we play an endless game of spam whack-a-mole. This system doesn’t protect us—it wears us down.

We do have the Telephone Consumer Protection Act (TCPA) and the Controlling the Assault of Non-Solicited Pornography and Marketing Act of 2003 (CAN-SPAM), but these don’t solve the problem because in the US, the onus is on every individual to opt out. Any business or scammer can just create new accounts, new business names, new programs (whatever they want!) and keep harassing you, while you, the individual user, are responsible for opting out over and over. Madness.

I am just a career marketing and communications practitioner who has spent over a decade working in tech, and I am literally begging the US government to give us a comprehensive federal law that would help solve the fragmentation and gaping holes we are experiencing today. We simply cannot get there if we allow our government to be run exclusively by people in their 70s and 80s who do not understand the technology that shapes our lives and data.

The American Privacy Rights Act (APRA) is a start, but it’s not enough.

Adjusting our norms to embrace “opt-in” rather than “opt-out” defaults is necessary, but individuals also need real recourse for when corporations ignore our wishes, and let’s face it, that happens plenty.

We have seen, over and over again, that “self-regulation” of the tech industry does not work. It is a joke. It has failed.

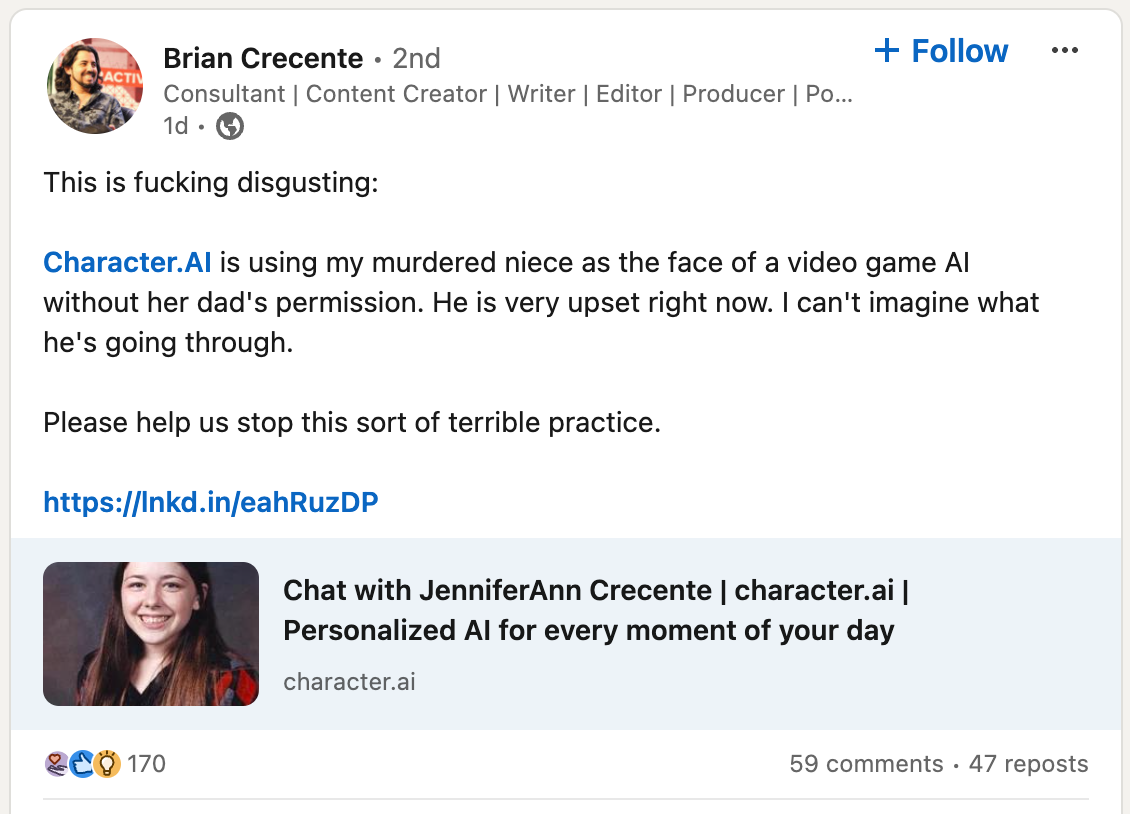

A case in point: This morning, I read about a company called Character.AI using the image of a murdered girl as the face of a video game AI without her family’s consent.

This type of increasingly common violation simply shouldn’t happen. Yet it has happened, and it will continue to happen. There is no moral or ethical bottom to this well.

Beyond the immediate shift from an opt-out to an opt-in paradigm, we need to revisit and clarify the data and privacy rights of the dead.

Do you know what happens to your data after you die? Do you imagine it’s up to you?

It might surprise you to hear there are currently no federal laws in the US regarding the rights of the deceased. Post-mortem privacy protection is not a thing that currently exists. This legal category is wholly a “creature of state law” which means it is complex, confusing, inconsistent, and still emerging in the US. In September, the California state senate passed AB 1836, a bill restricting the use of AI tech to replicate the likenesses of dead celebrities. SAG-AFTRA has praised the passing of the bill, which is great, but most of us don’t have union cards and we do generate huge amounts of personal data that remains unprotected.

Should Meta, Alphabet, Apple, Microsoft, and other tech giants have the final word on what happens to our millions of photos, files, and messages after we die? Currently, it seems, they do. This should alarm everyone interacting with these platforms, given that there is zero incentive for any business to act as an ethical or responsible manager of millions of individual estates, and overwhelming incentives for businesses to use the accumulated “data bodies” [1] of individuals for financial gain, regardless of the rights, wishes, or requests of the deceased or their survivors.

We desperately need software designers and technology business leaders to understand and care about basic ethics. And we need a government sufficiently independent from corporate interests to protect us from the situation in which we now live, where “move fast and break things,” the oft-repeated motto of powerful tech leaders like Mark Zuckerberg, extends to every facet of our private and personal lives.

Hemant Taneja of General Catalyst declared the “move fast and break things” era “over” in a Harvard Business Review article back in 2019, though the evidence, in the form of invasive AI applications across sectors, shows otherwise.

In that HBR piece, Taneja wrote, “‘Minimum viable products’ must be replaced by ‘minimum virtuous products’—new offerings that test for the effect on stakeholders and build in guards against potential harms.”

If only this statement were more than ethicswashing and wishful thinking. The American government must step up where technology leaders have proven time and again that they simply will not. And we all know what that means: This is a problem for all of us, and we must demand better.

Endnotes

Massive E-Learning Platform Udemy Gave Teachers a Gen AI 'Opt-Out Window'. It's Already Over. Teachers are surprised they have been opted into having their classes scraped for AI training. [404media.co]

The use of user-generated content to train AI models has been a consistent controversy across the internet over the last year or so, and, almost uniformly, platforms who are developing AI have decided to opt their users in by default. … Over the last few months, Udemy has been making posts on its community message board and website about the generative AI program. In particular, it has been telling instructors that its generative AI program will be good for its human instructors, and that “we respect intellectual property rights. We use AI responsibly and in support of our mission. We win together.”

CA bill restricts AI replications of dead performers [ABC7news.com]

"AB 1836 is specifically aimed at providing postmortem right of publicity protection when it comes to digital replicas," said Joseph Lawlor, a trademark attorney at Haynes Boone. "These are generally AI-generated voice or video of performers." Lawlor added that only around half of the states provide protections for a performer's likeness after they die. Even so, he said growing technology needs to be reflected in existing right of publicity laws.

How Ian Holm’s Return in ‘Alien: Romulus’ Brings SAG-AFTRA’s Digital Replicas Work Into Focus [Variety]

Holm’s appearance in the latest “Alien” installment underscores the role of the SAG-AFTRA collective bargaining agreement, which requires that the performer’s estate grant consent before a digital replica is created.

Americans Deserve More Than the Current American Privacy Rights Act [EFF]

The favorite tool of companies looking to get rid of privacy lawsuits is to bury provisions in their terms of service that force individuals into private arbitration and prevent class action lawsuits. The APRA does not address class action waivers and only prevents forced arbitration for children and people who allege “substantial” privacy harm. In addition, statutory damages and enforcement in state courts is essential, because many times federal courts still struggle to acknowledge privacy harm as real—relying instead on a cramped view that does not recognize privacy as a human right.

Artists Allege Meta’s AI Data Deletion Request Process Is a ‘Fake PR Stunt’. Last summer, Meta began taking requests to delete data from its AI training. Artists say this new system is broken and fake. Meta says there is no opt-out program. [Wired]

[In August 2023], when Meta began allowing people to submit requests to delete personal data from third parties used to train Meta’s generative AI models, many artists and journalists interpreted this new process as Meta’s very limited version of an opt-out program. CNBC explicitly referred to the request form as an “opt-out tool.” This is a misconception. In reality, there is no functional way to opt out of Meta’s generative AI training.

“Data bodies have more impact on your life than your real life and your real body. There’s no difference between what happens online and what happens in our real lives.”

—Laila Shereen Sakr aka VJ Um Amel

This term “data body” was coined, as far as I know, by artist, scholar, and feminist technologist Laila Shereen Sakr, PhD, who also goes by the moniker VJ Um Amel (Arabic for “Video Jockey Mother of Hope”). She is certainly the person who explained the concept to me, when I had the good fortune to interview her on behalf of CODAME in 2018. Sakr is currently an Associate Professor of Media Studies and Director of Undergraduate Studies at UCSB and is the author of the book Arabic Glitch: Technoculture, Data Bodies, and Archives (Stanford University Press, 2023). As an artist, she manipulates the aesthetics of glitch to articulate concepts of embodied data and global social justice.